As a publisher's popularity increases, the volume of comments increases, which is great! However, higher comment volume also brings more spam, toxicity, and other comments that don't contribute to the conversation. For many large publishers, moderation at scale can become a huge burden that obscures the value of comments. Some publishers spend hours moderating hundreds or thousands of comments a day. In an effort to reduce this cost, combat undesirable content, and resurface the value of comments, my team designed several moderation tools that give moderators more robust options while respecting their unique moderation preferences. For this series of projects, I was the lead product designer responsible for the end-to-end product, from user research and interaction design to visual design.

Note: The terms 'publisher' and 'moderator' are often synonymous for smaller sites. On larger sites, a publisher is often the overarching entity, which may have a moderation team that manages Disqus comments.

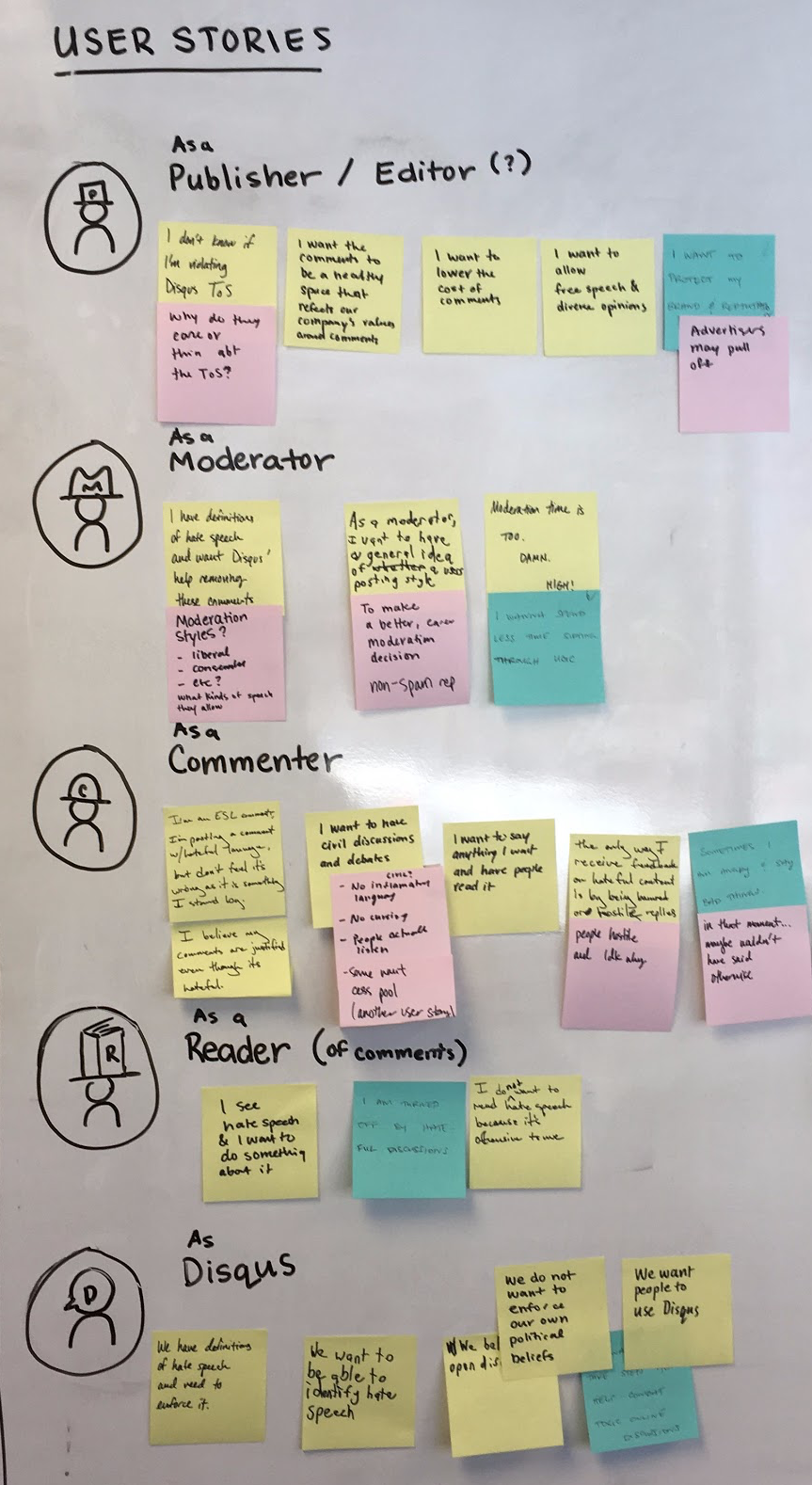

In 2017, hate speech became a big deal in the media, and all eyes turned to social media and communication platforms to see how they would define and react to hate speech on their networks. As one of the largest communication platforms with over 2 billion users, we knew that we had a duty to respond. I kicked off this project by getting alignment with the team around what we knew, what our assumptions were, and what we wanted to achieve. We started with a whiteboard and Post-it note session to think through the problems and stories that the different types of users on our network face regarding hate speech. From there, we realized that a lot of what we had brainstormed were assumptions, so we turned to user research.

We interviewed several Disqus publishers about their stance on hate speech and what they thought Disqus' stance should be. Because we were on an urgent timeline to publicly address our stance on hate speech, I also got early feedback on paper prototypes of a few different solutions that were based on our assumptions. From the user research, we learned that:

Based on this research, we publicly committed to fighting hate speech alongside publishers and to aggressively building more robust tools to help. As a result, we worked quickly to create the tools that publishers from our feedback sessions needed, such as shadow banning, timeouts, and comment policy.

While the tools we made helped relieve the burden of moderating undesirable content, we recognized that they were only incremental steps and that a lot more could be done. We wanted to invest some time and research into dreaming a bit bigger to see if we could make a substantial impact on moderation.

We initially wanted to build a machine learning algorithm that could automatically learn a publisher's unique moderation preferences over time. Unfortunately, despite seeking partnerships with third parties, we didn't have enough technical expertise to build a machine learning system that could do this well enough to earn a publisher's trust. Additionally, when we showed this concept to moderators, we learned that many of them deeply valued the voices of their commenters and didn't want machines passing judgment on human thought. Because of this, we decided to pivot away from machine learning as a solution entirely. Instead, we explored options that would allow human moderators to remain in control.

Taking all that we'd learned so far, this is what we knew:

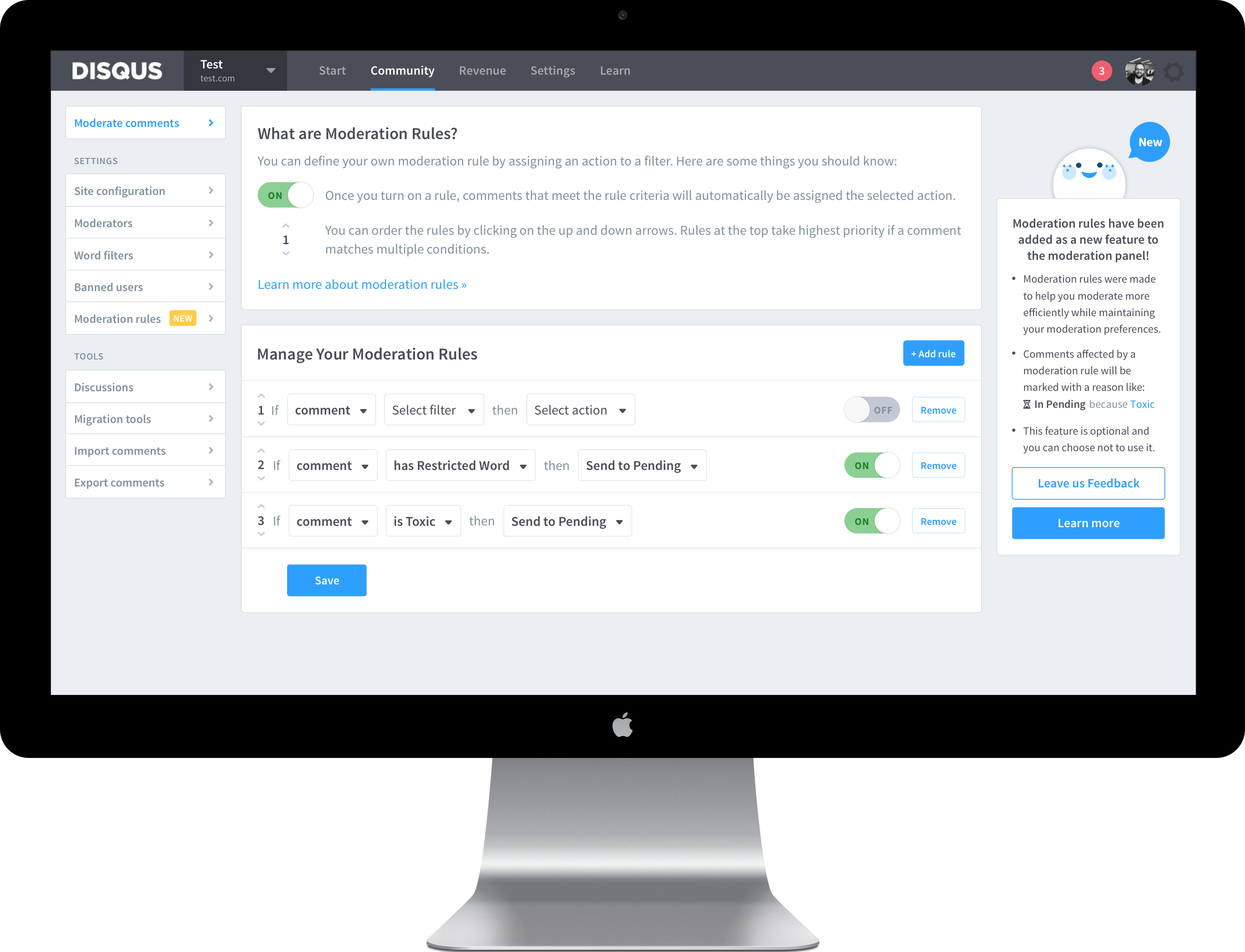

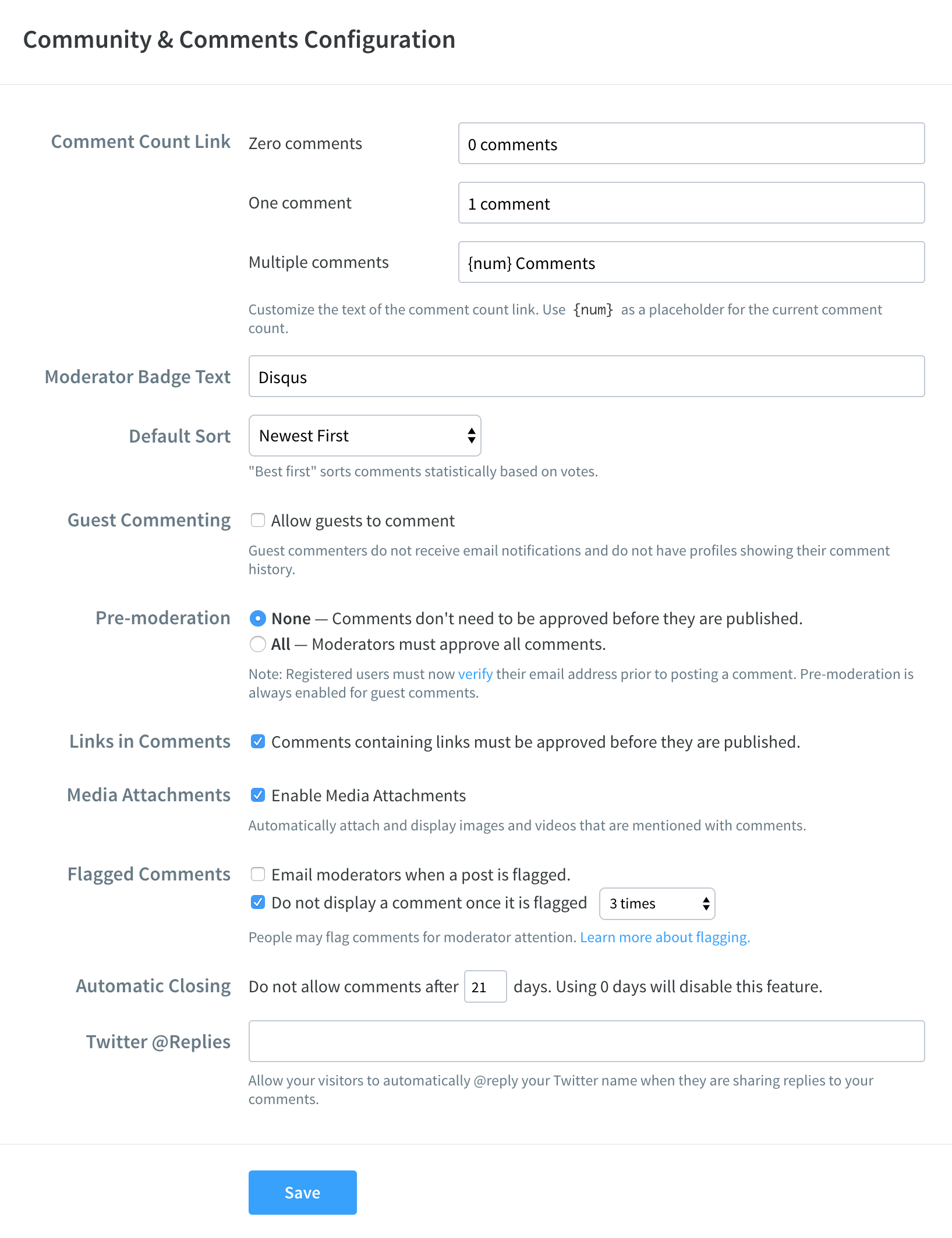

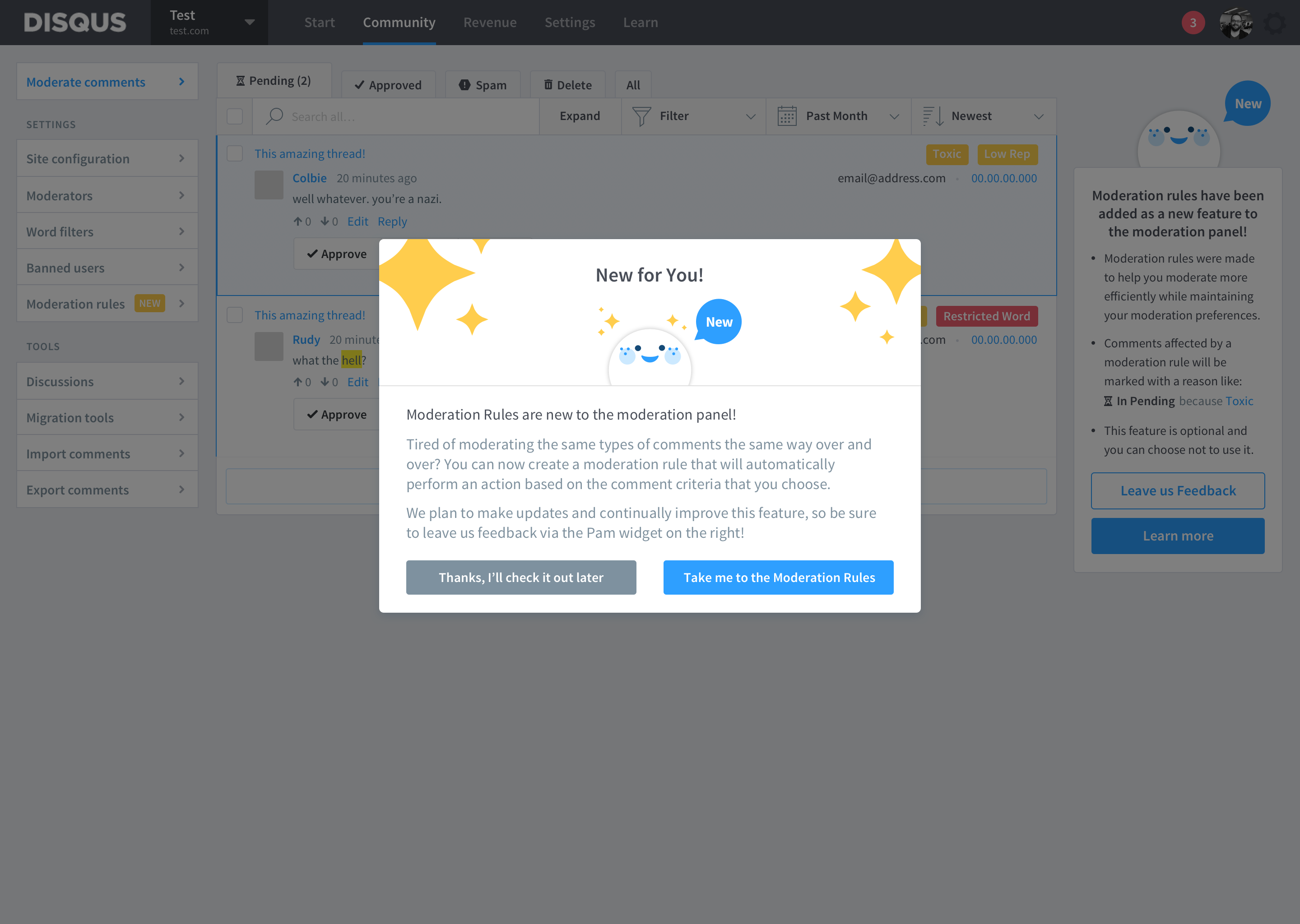

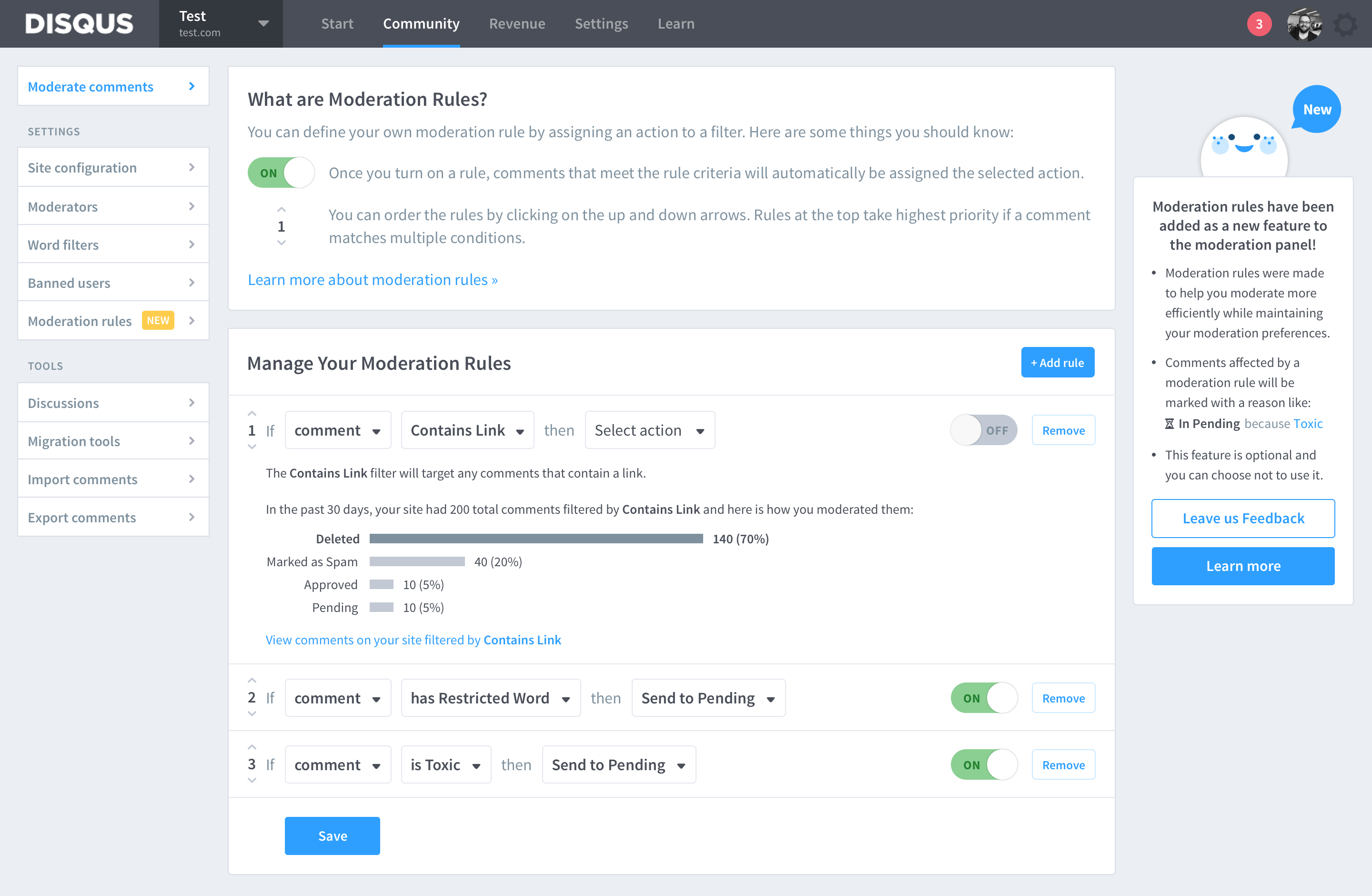

Keeping in mind that moderators want to be the ones passing judgment on comments, not robots, my team and I held a number of discovery sessions to pivot our designs. After exploring several options with a lot of input on technical feasibility from our engineer, we decided to design a rules-based engine called Moderation Rules. This new feature allows moderators to assign moderation actions to comments with certain characteristics. After a task-based usability test of the Moderation Rules concept with 5 moderators and an unprecedented 100% success rate on our primary workflow, we decided to move forward with building this feature. Additionally, instead of building a fully featured tool with hundreds of options that might not be useful, we opted for a beta release with a gradually increasing rollout so we could gather iterative user feedback as we continued to build out the product.
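To make the idea concrete, here is a minimal sketch of how a rules-based engine of this kind could work. It is an illustration only, not the actual Moderation Rules implementation: the `Comment` fields, the `Rule` shape, the action names, and the "first matching rule wins" behavior are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Comment:
    # Hypothetical comment shape; real comments carry many more attributes.
    author: str
    body: str
    link_count: int = 0


@dataclass
class Rule:
    # A rule pairs a comment characteristic (the condition) with an action.
    name: str
    matches: Callable[[Comment], bool]
    action: str  # hypothetical action names, e.g. "mark_as_spam"


def apply_rules(comment: Comment, rules: List[Rule]) -> Optional[str]:
    """Return the action of the first rule that matches the comment.

    Returns None when no rule matches, which leaves the comment for a
    human moderator to judge.
    """
    for rule in rules:
        if rule.matches(comment):
            return rule.action
    return None


# Example rules a moderator might configure (assumed for illustration).
EXAMPLE_RULES = [
    Rule("many links", lambda c: c.link_count >= 3, "mark_as_spam"),
    Rule("restricted word", lambda c: "badword" in c.body.lower(), "send_to_pending"),
]

if __name__ == "__main__":
    comment = Comment(author="guest", body="Check these out", link_count=5)
    print(apply_rules(comment, EXAMPLE_RULES))
```

The point of the sketch is that every outcome traces back to a rule a moderator wrote, so the moderator, not the system, decides what happens to a comment.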

Our analytics show that even with a limited set of filters, we've already begun to see healthy adoption from certain members of our beta group, who we hope to work with closely on future iterations. So far, some of the feedback from our beta group has us thinking:

Starting with the feedback above, we intend to continue iterating on the most useful parts of the product by relying on the feedback and needs of our publishers. We're so glad we were able to build a human-focused solution that reduces tedium and is a huge step for us toward building healthier, more engaging communities.